If you’ve just launched a website or a new page, being seen in Google search results starts with a crucial step: crawling and indexing.

In simple terms, before your content can show up in search results, Google needs to discover and store it in its vast database. Understanding how this process works gives you the power to improve your online presence.

Read on to learn what Google crawling and indexing mean, how often they happen, and what you can do to increase Google’s crawling rate.

Key Takeaways:

- Your website or webpage won’t appear on Google if it hasn’t been crawled and indexed.

- The crawling and indexing process involves Google exploring and storing information from web pages. It’s a continuous process aiming to keep the search results updated and relevant.

- Google automatically crawls and indexes pages, but this process takes time. The frequency varies based on several factors, and there’s no fixed timetable for it.

- Take control by requesting Google to index your new or updated pages. While it’s not guaranteed, it might still help your pages show up in search results faster.

- There are other ways you can encourage Google to crawl your site more often: create fresh, high-quality content, get good backlinks, adopt a mobile-first design approach, and submit an XML sitemap.

- Use Google Search Console (GSC) to check when Google last crawled your site and to spot indexing issues that are hindering your visibility in search results.

Table of Contents

- What is Google crawling?

- How does Google crawling work?

- What is Google indexing?

- How often does Google crawl websites?

- Factors that affect Google crawl rate and how to increase it

- How to check when Google last crawled your site

- How often does Google index websites?

- How often does Google reindex?

- Request indexing to get Google to crawl your site

- Why requesting indexing is necessary

- Steps on how to index website on Google

- How to check if your site has been indexed by Google

- Why hasn’t Google crawled my site yet?

- How often does Google update search results?

What is Google crawling?

Google crawling, also known as web crawling, is the process where special software programs called bots or spiders systematically navigate the internet, visiting web pages and analysing their content.

Google sends out these bots, chiefly Googlebot, to scour the internet and collect information from websites. Google then uses that information to index pages and rank them in search results.

They continuously explore the web to make sure that when users like you and me search for something, we get the most relevant results.

How does Google crawling work?

So, what actually happens when Googlebot crawls web pages? Here’s an overview:

- Googlebot starts with a few web pages it has crawled before. It reviews the information on each page and checks whether there are any new links to discover.

- It goes to these new links that lead to other pages. As a result, it finds new pages, expanding its list of URLs visited.

- Once it finds a page, it reads or checks what’s written on it. It analyses the page’s content and finds out what it is about.

- While reading, it looks for more links to follow. Now, it has even more pages to crawl and add to its URL list.

- The cycle continues. This process of following links and reading pages goes on and on, creating a big map of the internet.

Google then organises the information gathered from all the crawled pages. This way, it can quickly find and show the most relevant results when a user searches for something.
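The link-following cycle described above can be sketched in a few lines of Python. This is only a toy illustration, not how Googlebot is actually implemented: the `LINKS` dictionary is a made-up stand-in for real web pages, and the `crawl` function is a hypothetical name for a simple breadth-first traversal.

```python
from collections import deque

# Hypothetical link graph standing in for real web pages:
# each key is a URL, each value is the list of links found on that page.
LINKS = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/orphan": [],  # no page links here, so the crawl never finds it
}


def crawl(seed_urls, links):
    """Return URLs in the order a simple breadth-first crawl discovers them."""
    seen = set(seed_urls)
    queue = deque(seed_urls)
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)  # "read" the page
        for link in links.get(url, []):
            if link not in seen:  # only queue pages not seen before
                seen.add(link)
                queue.append(link)
    return order


discovered = crawl(["/"], LINKS)
print(discovered)
```

Notice that `/orphan` never appears in the result. A page that nothing links to cannot be reached by following links alone, which is why sitemaps and internal linking matter later in this guide.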

What is Google indexing?

When Google crawls a webpage, it doesn’t just read it. It also stores a copy of the page’s content in its vast library, which is the index.

So, indexing is simply the process where Google collects, organises and stores information like page titles, descriptions and content in the Google index.

When a user enters a query, Google will search the index to find the most relevant pages and return them as search results.

Therefore, if your web page is not indexed by Google, it will not appear in search results. And if you don’t appear in search, your users will not be able to find you.

Fortunately, there are measures you can take to avoid this, which are covered later in this guide.
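To make the idea of an index more concrete, here is a minimal sketch of an inverted index, a common data structure for looking up pages by the words they contain. Google’s real index is far more sophisticated, so treat this purely as an illustration; the `PAGES` data and the `build_index` and `search` functions are hypothetical names made up for this example.

```python
from collections import defaultdict

# Hypothetical pages: URL -> text content.
PAGES = {
    "/coffee-guide": "how to brew coffee at home",
    "/tea-guide": "how to brew green tea",
    "/contact": "contact our team",
}


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, query):
    """Return URLs containing every word in the query, sorted for stable output."""
    words = query.lower().split()
    if not words:
        return []
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return sorted(results)


index = build_index(PAGES)
print(search(index, "brew coffee"))  # ['/coffee-guide']
print(search(index, "brew"))         # ['/coffee-guide', '/tea-guide']
print(search(index, "pricing"))      # []
```

The last query returns nothing because no stored page contains that word. In the same way, a page that was never added to the index can never be returned for any query.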

How often does Google crawl websites?

Google’s crawling frequency isn’t consistent – it can happen within a day, a few weeks, or even months…

And there’s no fixed schedule or timetable to rely on.

So, as much as we want to have control over SEO, we can’t predict exactly when Google will crawl a page. The timing is decided by Google’s algorithms, not by site owners.

However, there are certain factors that influence how often Google crawls a website…

And luckily, we have the power to address and optimise for them.

Factors that affect Google crawl rate and how to increase it

To optimise how frequently Google crawls your website, it’s essential to focus on various factors that influence crawling frequency. Here’s a breakdown of these factors and actionable tips to enhance crawling rates:

1. Website freshness and updates

Websites with frequently updated content are crawled more often. Fresh content indicates an active site, prompting Google to crawl more frequently.

Tips:

- Publish new content regularly, whether it’s blog posts, articles, or updates to existing pages.

- Share your new content on social media and other relevant platforms to attract attention. This also encourages Google to crawl your site more frequently.

- Use a content management system (CMS) that allows you to schedule posts in advance. This ensures a consistent flow of fresh content.

2. Website authority and quality

Established and high-quality websites are crawled more regularly as they are deemed more reliable sources of information.

Tips:

- Create high-quality, in-depth, original content that solves your audience’s problems or challenges. This demonstrates topical authority.

- Establish yourself as an expert in your niche by contributing to industry publications and participating in online forums.

- Earn backlinks from reputable websites in your industry. Backlinks are positive signals to Google that your website is credible and authoritative.

3. Backlink profile

Websites with a diverse and authoritative backlink profile often experience more frequent crawling. Backlinks act as pathways for bots to discover and crawl a site.

Tips:

- Reach out to other websites in your niche and offer to write guest posts or participate in content collaborations.

- Submit your website to relevant directories and industry-specific websites.

- Monitor your backlink profile regularly and remove any low-quality or spammy links.

4. Page popularity and traffic

Popular pages with higher traffic tend to be crawled more frequently since Google aims to keep the content on these pages up to date. For example, a popular news website like CNN or BBC will be crawled more frequently than a personal blog with a small audience.

Tips:

- Promote your content on social media, email marketing, and other relevant channels to drive traffic to your website.

- Optimise your website for relevant keywords to improve its visibility in search results.

- Engage with your audience through comments, forums, and social media interactions to build a loyal following.

5. Server speed and performance

A slow server response time can limit the crawl rate as Googlebot spends more time waiting for pages to load.

Tips:

- Choose a reliable hosting provider with a proven track record of fast performance.

- Optimise your website’s code and images to reduce loading times.

- Implement caching mechanisms to store frequently accessed data and reduce server load.

6. Site health and performance

Websites with good technical health, such as fast loading speeds and minimal server errors, are crawled more efficiently and at a higher rate.

Tips:

- Address any technical issues promptly, such as broken links, slow-loading pages or server errors.

- Keep your website’s software and plugins up to date to ensure compatibility and security.

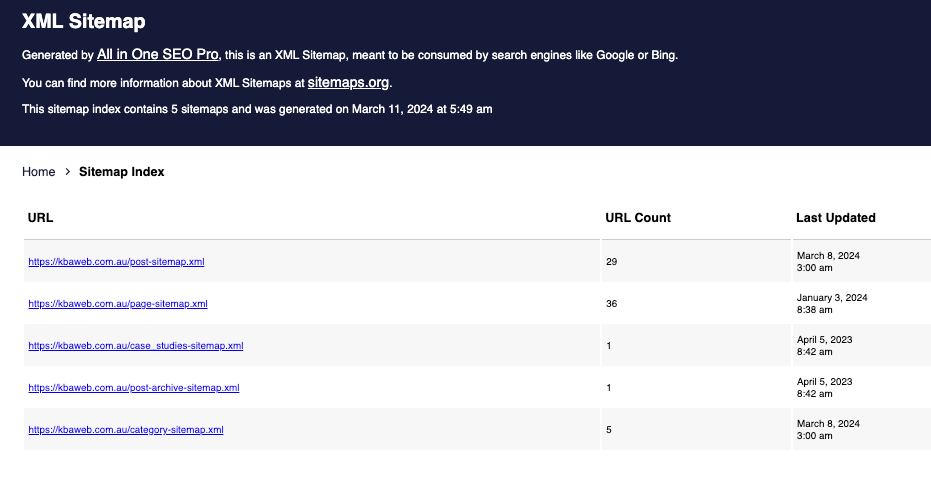

7. XML Sitemap

A sitemap tells Googlebot which pages you want crawled and indexed. This helps Googlebot prioritise crawling, especially for pages that might not be easily discoverable through internal linking alone.

Tips:

- Create an XML sitemap that includes all the important pages on your website.

- Submit your XML sitemap to Google Search Console to inform Googlebot about your website’s structure and content.

- Keep your XML sitemap updated as you add new pages or make changes to existing ones.
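If your CMS or SEO plugin doesn’t generate a sitemap for you, the sketch below shows roughly what one contains and how it could be built with Python’s standard library. The `example.com` URLs, the dates and the `build_sitemap` helper are all placeholders made up for illustration; the `urlset`, `url`, `loc` and `lastmod` element names come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # date in YYYY-MM-DD form
    ET.indent(urlset)  # pretty-print; requires Python 3.9 or later
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/crawling-guide", "2024-02-01"),
])
print(sitemap_xml)
```

You would typically save the output as `sitemap.xml` in your site’s root directory and then submit its URL in Google Search Console.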

8. Internal linking

Effective internal linking allows Googlebot to follow a natural path through your content. If your website connects related pages, Google will likely crawl through it more efficiently. In contrast, a website lacking proper internal links may face a slower crawl.

Tips:

- Use relevant anchor text when linking between pages, providing context for both users and search engines.

- Link to related pages within your content to guide users through your website and distribute link equity.

- Create a clear hierarchy of pages, ensuring that important pages are easily accessible through internal links.
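One way to audit internal linking is to measure how many clicks each page sits from the homepage and to flag pages that no internal link reaches. The sketch below does this for a small, made-up link map. In practice you would export this data from a crawling tool, so the `LINKS` dictionary and the `click_depths` function are assumptions for illustration only.

```python
from collections import deque

# Hypothetical internal link map: page -> pages it links to.
LINKS = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/crawling-guide"],
    "/services/seo": [],
    "/blog/crawling-guide": ["/services/seo"],
    "/old-landing-page": ["/"],  # links out, but nothing links to it
}


def click_depths(home, links):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths("/", LINKS)
orphans = sorted(set(LINKS) - set(depths))

print(depths)   # clicks needed to reach each page from the homepage
print(orphans)  # pages with no internal path from the homepage
```

Pages with a large click depth, or pages that show up in `orphans`, are good candidates for extra internal links from your more important pages.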

9. Mobile-friendliness

With mobile-first indexing, Google primarily uses the mobile version of a page for crawling and indexing. A website that works well on mobile devices is therefore easier for Googlebot to crawl and index properly.

Tips:

- Implement responsive design to adapt your website’s layout to different screen sizes.

- Use a mobile-friendly font size and spacing to ensure readability on smaller screens.

- Test your website’s mobile-friendliness regularly.
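As a very rough first check, responsive pages normally declare a viewport meta tag containing `width=device-width`. The sketch below looks for that tag in a page’s HTML. It is only a heuristic under that assumption and no substitute for testing on real devices; the `ViewportFinder` class and `has_responsive_viewport` function are hypothetical names for this example.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport" and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """Return True if the HTML declares a viewport with width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport is not None and "width=device-width" in finder.viewport


# Hypothetical page snippets.
responsive_page = (
    '<html><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head></html>'
)
fixed_width_page = "<html><head><title>Old layout</title></head></html>"

print(has_responsive_viewport(responsive_page))   # True
print(has_responsive_viewport(fixed_width_page))  # False
```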

10. Site structure and content

Websites with a clear and organised structure, including clear headings and high-quality, unique, relevant content, are prioritised for crawling.

Tips:

- Use clear headings and subheadings to organise your content and make it easy to scan.

- Break up long blocks of text with images, videos or other visual elements.

- Create a consistent navigation structure that allows users to easily find the information they need.

11. Robots.txt file

The robots.txt file can be used to control which pages Googlebot should or should not crawl. Configuring this can prevent Googlebot from wasting time crawling irrelevant pages.

Tips:

- Create a robots.txt file and place it in the root directory of your website.

- Use the robots.txt file to specify which pages you want to exclude from crawling.

- Regularly review your robots.txt file to ensure it accurately reflects your crawling preferences.
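Before publishing a robots.txt file, it helps to confirm that it blocks only what you intend. The sketch below uses Python’s built-in `urllib.robotparser` module to test a few paths against a sample set of rules. The rules, the `example.com` domain and the paths are made up for illustration. Python’s parser may also resolve conflicting Allow and Disallow rules differently from Googlebot, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths to check against the rules above.
paths = ["/", "/blog/crawling-guide", "/admin/settings", "/cart/checkout"]
results = {
    path: parser.can_fetch("Googlebot", "https://www.example.com" + path)
    for path in paths
}

for path, allowed in results.items():
    print(path, "allowed" if allowed else "blocked")
```

If an important page shows up as blocked, fix the rule before uploading the file to your site’s root directory.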

How to check when Google last crawled your site

Checking when Google last crawled your site allows you to monitor how active Google is in visiting and indexing your pages. Apart from that, you’ll be able to find out if your site is experiencing any crawling issues.

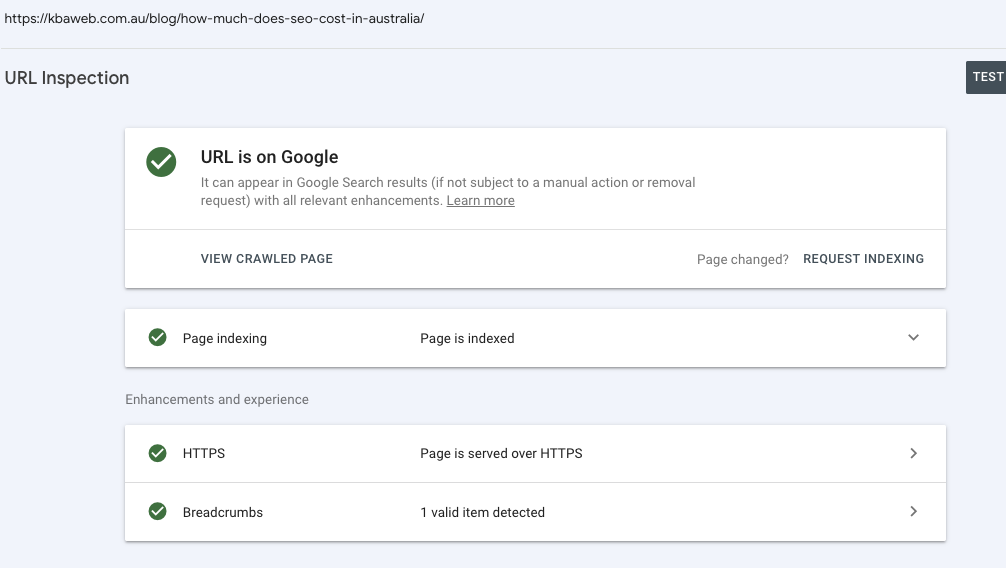

Steps to do this:

- Log in to Google Search Console.

- Open the URL Inspection Tool. In the left-hand menu, click on ‘URL Inspection’. Then, enter the URL of the page you want to check into the search bar at the top.

- Expand the ‘Coverage’ section (labelled ‘Page indexing’ in newer versions of Search Console).

- Scroll down to the ‘Crawl’ information and look for the “Last crawl” date. This is the last time Googlebot crawled the specific page you entered.

If you want to check the crawl date for other pages, repeat the process by entering different URLs into the search bar.

Note that the URL Inspection Tool only provides crawl information for individual pages, not for the entire website.

To get a broader overview of crawl activity, you can use the Crawl Stats report in Google Search Console:

- Click on ‘Settings’ in the left-hand menu.

- In the ‘Crawling’ section, click on ‘Open Report’ under ‘Crawl Stats.’

Now, you can analyse the crawl stats report, which provides a graphical overview of crawl activity over time. This includes the number of pages crawled per day, kilobytes downloaded per day and average response time.

You can adjust the date range to view crawl activity for specific periods.

How often does Google index websites?

Google does not index websites on a fixed schedule. The frequency of indexing depends on various factors, including the website’s popularity, authority, content freshness, technical health and many others mentioned earlier.

How often does Google reindex?

While Google does revisit indexed pages for updates, how often this happens varies for each page. In fact, according to Google’s John Mueller, it can take weeks before new pages or changes are shown in Google Search.

Mueller further explained that Google focuses on crawling important pages more often. So, changes in these pages are reflected in search results faster compared to less important ones.

Request indexing to get Google to crawl your site

Google automatically crawls and indexes websites through Googlebot, but this may take time. You can manually submit an indexing request to bring your pages to Google’s attention more quickly.

Why requesting indexing is necessary

Before we go into how to request indexing manually, let’s first see why it matters. Requesting indexing is useful in these situations:

- Updated Content

If you have published new or updated content, requesting indexing can potentially expedite its inclusion in search results, ensuring users see the latest information.

- Technical Issues

If you have resolved technical issues that were preventing indexing, requesting indexing can notify Google to recrawl and index your pages properly.

- New Websites

When launching a new website, requesting indexing can help Google discover and index your pages.

Steps on how to index website on Google

Follow the steps below to request indexing in GSC.

- Access Google Search Console.

- Navigate to ‘URL Inspection’ tool in the left-hand menu.

- Enter the URL of the page you want to index into the search bar at the top. Then, press ‘Enter’ or click on the “Inspect” button.

- Click on the ‘Request Indexing’ button.

- Monitor the status. Google will process the indexing request. You can check the status by revisiting the URL Inspection Tool later.

Keep in mind that requesting indexing does not guarantee immediate indexing. However, it can improve the chances of your pages being indexed sooner.

How to check if your site has been indexed by Google

There are two ways to check if your site has been indexed by Google:

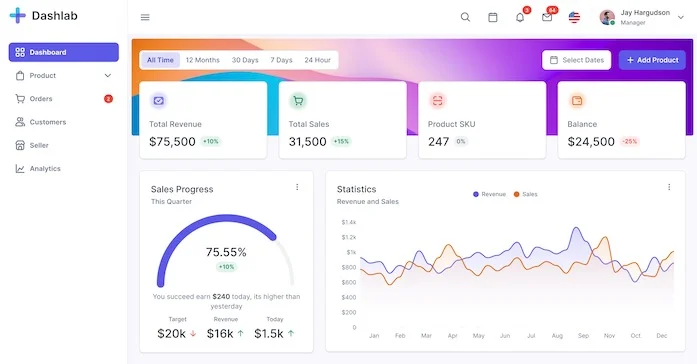

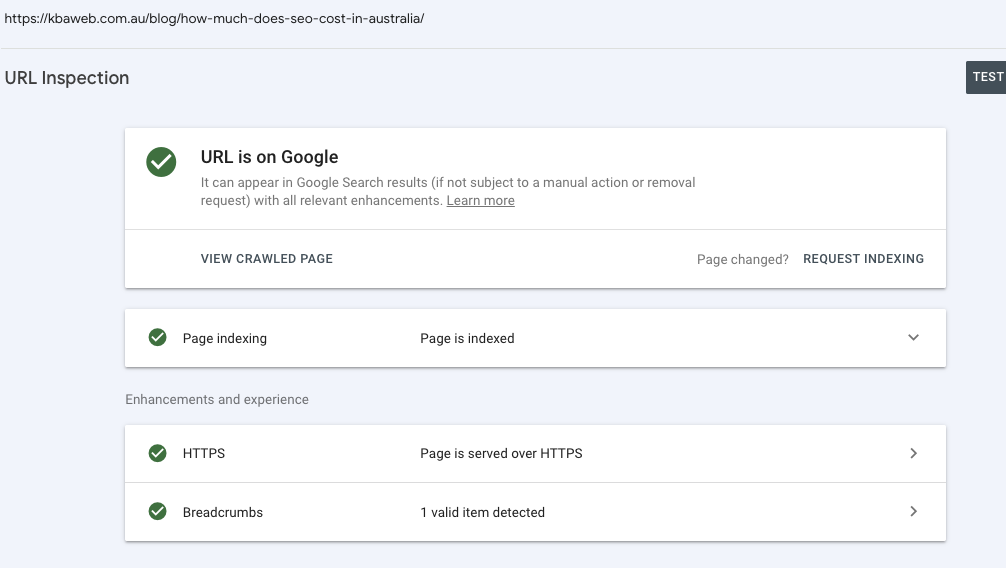

Using GSC

- Log in to your GSC.

- Input the URL of the page you want to check in the ‘Inspect any URL’ search bar at the top. Then, press enter.

- Confirm if your site has been indexed.

The page will say “Page is indexed” if your webpage is in Google’s index. If not, it will say otherwise.

Why hasn’t Google crawled my site yet?

Below are common reasons why Google may not be crawling or indexing your site:

- You have a new website.

If your website is brand new, it may take some time for Google to discover and crawl it.

- Your site or page has technical problems.

Broken links, server errors, slow loading speeds and other technical issues can hinder Googlebot’s ability to crawl and index your pages effectively.

- You misconfigured your robots.txt file.

The robots.txt file can instruct Googlebot to avoid crawling certain pages or sections of your website. Make sure it’s not blocking Googlebot from accessing important pages.

- Your site does not have a good internal linking structure.

Without proper internal links, Googlebot may struggle to find all the pages on your website and identify which ones are important. This can result in incomplete crawling and indexing.

- You have low-quality content.

Google’s algorithms are designed to identify and prioritise content that is relevant, informative, and valuable to users. Low-quality content often lacks these attributes, making it less likely to rank well or even be indexed at all.

- Your website has been penalised by Google.

If Google has penalised your website for violating its guidelines, it may be excluded from crawling and indexing.

How often does Google update search results?

Google search results may be updated within a day or even within hours. But sometimes, it might take a few weeks or months for rankings to shift.

How often Google updates its search results depends on how big the changes to its algorithm are and how fast it crawls and indexes new content.

As Google discovers and indexes new or updated content, it incorporates this information into its search results, leading to updates in the rankings and presentation of search results.

Final thoughts

Understanding crawling and indexing is vital for your website’s visibility. Imagine having a website that Google can’t find due to crawl or index issues – it defeats the purpose of being online.

Armed with this knowledge, you should regularly check your website’s crawling and indexing status. This is a crucial part of website maintenance and of your long-term SEO strategy.

If handling website maintenance feels overwhelming, seek assistance from reliable SEO services. At KBA Web, we manage crawling, indexing, and overall website maintenance so you can avoid the hassle and focus more on running your business!