Your backlink profile is strong and your content is A+. Yet, your page struggles to take off, and worse, high-ranking pages are dropping. It’s time for you to investigate a silent SEO killer: duplicate content.

We call it as such because it does the damage without you even realising it.

But like any technical SEO issue, there’s a way to spot and fix these duplicates. We’ll walk you through it in this blog, so you can get your site back on track.

Table of Contents:

What Is Duplicate Content?

Duplicate content in search engine optimisation refers to any content that appears more than once on the web, whether within your own site or across multiple external sites.

This includes exact match or word-for-word content, as well as those that, as Google describes, are ‘appreciably similar.’

To give you an idea, here’s an example:

| Original | Exact Match | Appreciably Similar |

|---|---|---|

| Finding time for exercise can be challenging for busy mompreneurs. But staying active gives you the energy to take care of the kids, run errands and stay on top of the business. | Finding time for exercise can be challenging for busy mompreneurs. But staying active gives you the energy to take care of the kids, run errands and stay on top of the business. | Fitting in an exercise routine can be difficult for mompreneurs. But staying fit is key to having the energy for your kids, errands and business. |

| Here’s a list of exercises that only take 15 minutes (and it doesn’t include running or lifting weights): | Here’s a list of exercises that only take 15 minutes (and it doesn’t include running or lifting weights): | Here’s a list of 15-minute exercises you can easily squeeze into your busy day (no running or weights involved!): |

It’s a rather oversimplified example, but enough to point out that duplicate content often ‘copies’ the structure of the original and has no added value.

That is not to say all duplicate content is created intentionally. In fact, you could have duplicate content on your page right now without knowing it.

For instance, you may be using the same product description on multiple pages of your own site, creating internal duplicate content in the process.

External duplicate content, on the other hand, usually happens when another site copies and pastes your content onto their own sites. This is often the case with content syndication, as well as content theft.

Later on, we’ll explore more ways that duplicate content can occur.

Why is having duplicate content an issue for SEO?

Duplicate content can damage your site’s performance and reputation over time if not promptly addressed. These are the many ways it affects your SEO:

1. Diluted Rankings

Search engines like Google don’t like duplicate content. If two pages on your site have the same content, they’ll struggle to determine which version they should index and rank.

This can lead to diluted rankings as authority and relevance are split between the duplicates, impacting your SEO negatively.

2. Crawl and Indexing Inefficiency

For each website, search engines allocate a specific crawl budget, which influences how often Google crawls a site.

If your site has multiple pages with similar content, search engines might use up the crawl budget on those pages…

So, any new or updated content might be missed and take longer to get indexed.

Furthermore, duplicate content lowers the overall quality of your site. This can lead search engines to reduce your crawl budget, leading to less frequent crawling of your important pages.

3. Poor User Experience

Duplicate content is bad for SEO because it leads to poor user experience.

For instance, imagine having to sift through similar or repetitive content across pages on a website before you find what you’re looking for.

If your site requires your audience to go through such a hassle time and time again, expect them to eventually look for alternatives and avoid revisiting your site again.

Ultimately, poor UX damages your brand’s reputation and decreases traffic to your site.

4. Diluted Link Value

When multiple versions of content exist, any inbound links pointing to those pages are split among them.

For example, if you publish an article on two different URLs – URL A and URL B – and it receives links from four websites, two of those sites might link to URL A and the other two to URL B.

Instead of all links pointing to a single version of the article, the link equity is divided between the two URLs, meaning no single page fully benefits from the incoming links.

Whereas, if there was only one URL, that page would gather all the link equity, making it more likely to rank higher in search results.

5. Potential Penalties

We say ‘potential’ because generally, Google does not penalise duplicate content.

Unless you’re intentionally scraping or copying content from other websites, which is considered plagiarism and thus, illegal…

Most instances of duplicate content are unintentional anyway, which is why search engine penalties rarely happen.

How Duplicate Content Happens

Duplicate content can come from a variety of sources, oftentimes due to unexpected or accidental technical issues or your own content management practices. Here’s a look at some common causes:

1. HTTP and HTTPS Variations

Duplicate content can occur when both HTTP and HTTPS versions of your site are accessible.

This typically happens after transitioning from HTTP to HTTPS for security reasons, but not redirecting the HTTP versions to the HTTPS versions.

For instance, you might have both http://yourwebsite.com and https://yourwebsite.com existing at the same time.

Search engines may see both versions as separate entities, which leads to duplicate content issues.

2. WWW vs Non-WWW Pages

Similar to the matter on http and https, duplicate content issues arise when both the www and non-www versions of your site are accessible.

For example, ‘www.example.com’ and ‘example.com’ might be treated as two different websites. If both URLs house the same type of content, you’ve now created duplicate content.

3. Use of Trailing Slash

Let’s look at the two URL samples below:

- http://example.com/page

- http://example.com/page/

To the human eye, both pages may seem one and the same. But for search engines, they are separate and unique.

If both pages are made accessible to search engines, you can potentially face a duplicate content issue.

4. URL Variations

URL parameters for sorting, filtering or tracking – often added to e-commerce sites – can create multiple URLs with identical content.

When customers sort products by price or filter by size, the page content gets updated and the URL changes to reflect those actions. This creates unique variations, like:

- http://example.com/products?category=shoes

- http://example.com/products?category=shoes&sort=price

- http://example.com/products?category=shoes&size=medium

Each of these URLs may display the same or very similar content, which search engines may interpret as duplicate content.

5. Pagination

Pagination refers to the practice of dividing content across multiple pages.

This is common on e-commerce and news websites, where only a few items are displayed per page. Users can click ‘next’ or ‘page 2’ to view the rest of the items.

Each page in a paginated series typically contains similar content, such as repeated headers, footers and navigation elements. Even some of the main content might overlap or be very similar.

This repetition can cause search engines to view these pages as duplicate content.

6. Session IDs

Session IDs are unique identifiers added to URLs so websites can keep track of user sessions or interactions, like what items they’ve added to their cart or their navigation history.

These session IDs are often appended to the URL as a query parameter.

For instance, if three users visit the same product page, you might end up with:

- http://example.com/product?sessionid=12345

- http://example.com/product?sessionid=67890

- http://example.com/product?sessionid=54321

A new URL with a different session ID is created for every user visiting a particular page, even if the content being viewed is the same.

This results in multiple URLs for the same content, which search engines may interpret as duplicate content.

7. Printer-Friendly Versions

Printer-friendly versions of web pages are much simpler versions designed for easy printing.

These versions focus solely on the core content, omitting unnecessary elements like navigation bars, sidebars, ads and other features.

A printer-friendly page often has a unique URL to differentiate it from the standard version.

For example, a standard article URL might look like this: ‘http://example.com/article’, while the printer-friendly version could be ‘http://example.com/article?print=true’

That said, although the content remains essentially the same, the different URL structure creates multiple versions of the same page, leading to duplicate content issues.

8. Content Management Systems Configuration

CMS platforms often create separate pages for categories, tags and archives, all of which might display the same posts or articles.

For example, a blog post might be accessible through multiple URLs:

- http://example.com/blog-post

- http://example.com/category/blog-post

- http://example.com/tag/blog-post

When these different URLs are indexed by search engines, it creates duplicate content issues, as search engines may see these different URLs as separate pages with the same content.

9. Near-Identical Local Service Pages

Businesses offering services in different cities or places often create multiple pages tailored to and optimised for that specific location.

This localised content generally improves user experience – unless the core content remains almost identical.

For example, a plumbing company might create:

- http://example.com/plumbing-sydney

- http://example.com/plumbing-melbourne

If these pages contain nearly the same descriptions of plumbing services, only differing by city names, addresses and a handful of words, they can be seen as duplicate content.

Even worse, this approach could be seen as a deceptive practice as it involves creating many pages with thin content and no added value.

10. Content Syndication or Republishing

Content syndication involves republishing your original content across websites, usually authoritative ones with a good amount of following, to expand customer reach and enhance brand visibility.

However, if websites that syndicate your content don’t manage it properly, for instance, failing to attach canonical tags and provide appropriate backlinks, search engines can perceive the syndicated content as duplicate.

11. Copied or Scraped Content

You’re a victim of copied or scraped content when your site’s material is republished elsewhere without your permission.

Clearly, it’s not okay to copy content from a site. However, the reality is that many websites still engage in this illegal practice to manipulate search rankings.

Good thing search engine algorithms have become much smarter at identifying the original source. In fact, Google assures us that if you’re the original creator, your website won’t face any negative effects from being scraped.

At times, though, copying content isn’t done with malicious intent, especially in e-commerce. Many websites sell the same items and often copy the manufacturer’s descriptions word for word.

Regardless, copied or scraped content can lead to duplicate content issues.

How to Find Duplicate Content

You can’t fix what you can’t find, so the first step is identifying the duplicate content on your site.

Manually checking your pages is always an option, but as your site grows, so does the potential for errors and misinterpretations. You’re better off spending that time and energy on other strategic SEO tasks.

Instead, here are some efficient approaches to checking for duplicate content.

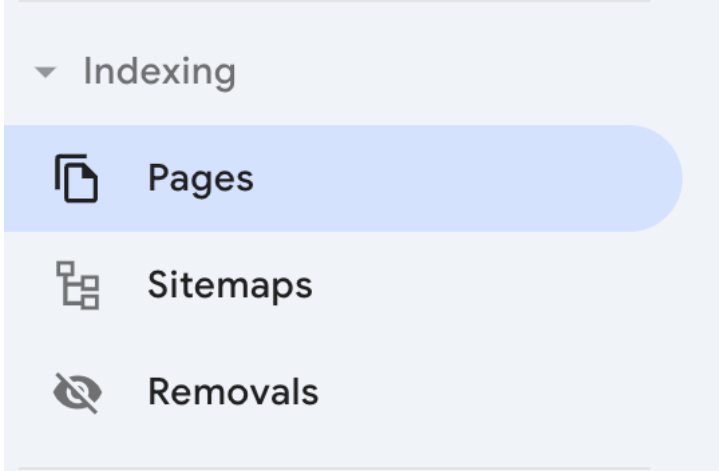

1. Check Indexed Pages on Google Search Console

Google Search Console (GSC) allows you to monitor, maintain and troubleshoot your site’s presence on Google, providing insights into any duplicate content on your site.

In the left side panel of your GSC, navigate to ‘Indexing’ > ‘Pages’.

You’ll be able to see the number of pages that are indexed and those that are not.

Scroll down to see the reasons why pages are not indexed.

If your site is plagued with duplicate content, they will appear in the list of issues.

Click on the item to view all URLs affected by duplicate content.

2. Use Google Search Operators

Google search operators are special commands that help you refine and filter search results based on your requirements.

They are particularly useful when you want to quickly check if there are pages within your domain with duplicate content issues. You can also use it to check external sources of duplicate content, possibly from scraped or copied content.

To identify internal duplicate content, use these operators:

- ‘site:’

When you input ‘site:[your domain url]’ in Google search, the results will show the total number of your web pages that Google has indexed.

Ideally, this number should match the total number of unique pages (or be close to it) on your website.

Say, you have made 100 unique pages but the Google results show 500 pages. It’s likely that 400 of those pages are duplicates.

- ‘site:’ + ‘intitle: ’

Using the operator ‘site:[yourdomain] intitle: [keyword]’ is particularly helpful when you suspect that you have multiple pages targeting the same keyword.

This returns a list of all indexed pages on your site that have the specified keyword in their titles, helping you easily spot internal duplicate content.

To identify duplicate content coming from external sources, simply use the ‘intitle: [site title]’ operator. This will return a list of pages from other sites with titles exactly matching yours.

3. Use an SEO Tool

SEO tools that perform site audits can scan your website for SEO issues, including duplicate content. Some popular industry tools include:

- Screaming Frog

- SEMrush

- Ahrefs

What’s great about these tools is they provide in-depth analysis and practical recommendations to fix duplicate content issues, as well as other problems affecting your site’s overall health and performance.

It’s pretty straightforward. You select a tool, run a crawl, wait for the analysis, and then review the detailed report.

Alternatively, you can use tools specifically designed to detect duplicate content, such as Copyscape and Siteliner. Both are excellent at pinpointing internal and external duplicate content issues.

How to Avoid Duplicate Content in SEO

Now that you understand how duplicate content happens and the ways to identify it, how do you deal with it and make it disappear?

Here are some solutions and best practices to effectively avoid and fix duplicate content issues.

1. Canonical Tags

A canonical tag (rel=”canonical”) is an HTML element you add to the page’s code, specifically in the <head> section, to point out which among the many versions of the page search engines should rank.

This way, Google won’t have to guess which page to prioritise. You eliminate the risk of it choosing the wrong version.

If you recall the issues we discussed earlier, canonicalization should address most of them.

For example, if you have three similar pages: Page A, Page B and Page C, and you want Page A to be the primary version, you should add a canonical tag on Pages B and C pointing to Page A.

You can do this by adding the following line in the <head> section of Pages B and C:

<link rel=”canonical” href=”http://example.com/page-a” />

Note that this does not deindex Page B and Page C. Both users and search engines will continue to have access to these pages, but the link equity and search ranking will go to Page A.

It’s also good practice to put a canonical on the original version itself, in this case, Page A. This is called a self-referencing canonical.

This solidifies a page as the original source and helps get rid of duplicate content issues caused by URL variations (e.g. with and without trailing slashes, or HTTP vs. HTTPS).

Moreover, it also protects against content scrapers, as well as those who mishandle syndicated content.

2. 301 Redirects

301 redirect is a permanent redirect from one URL to another.

It works this way: if someone visits an old page ‘https://oldversion.com’, they’ll be automatically led to the new location ‘https://newversion.com’.

Search engine crawlers will follow the same path, ensuring they index the updated page instead.

This method is useful when you’re migrating to a new domain or deleting a page from your website.

It syphons traffic and link equity from your old page to the new one, and at the same time, eliminates duplicate content issues.

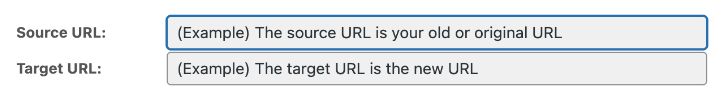

An easy way to do this on WordPress is to download a redirection plugin, where you simply input the source URL and target URL.

3. Combining Near Identical Content

When you consistently put out new content, there comes a time when you might end up repeating topics you’ve already covered, whether intentionally or not.

For example, one who runs a fitness blog might already have published ‘beginner workout tips’ and ‘fitness basics’, both of which likely cover the same ground.

To avoid duplicate content issues, create a comprehensive post that fully addresses your readers’ questions and queries.

This approach not only prevents duplicate content but also enhances the reader’s experience by providing all the necessary information in one place.

Additionally, apply a 301 redirect from the outdated pages to the new one to transfer traffic and authority to the updated content.

4. ‘Meta Noindex, Follow’ Directive

When handling tag or category pages that might be contributing to duplicate content on your site, the Meta Noindex,Follow directive is very useful.

This directive prevents these pages from being indexed but still allows search engines to follow the links they contain. This means that while the page itself won’t appear in search results, any links on the page can still pass link value to other pages.

To apply this, simply add the meta tag in the head section of the HTML in the format below:

<meta name=”robots” content=”noindex, follow”>

5. Requesting Removal of Content

In case you find your content copied and the duplicate ends up outranking your original, reach out to the website owner and request content removal.

If that doesn’t help, take further action by reporting the website to Google and requesting to take down plagiarised content. To do this, simply complete Google’s Legal Troubleshooter.

6. Unique, Original Content

Most importantly – and it cannot be stated enough – write content that’s uniquely yours.

Draw on data from your own analytics and share genuine stories about your experiences with people or clients on the topic.

For location pages that might naturally seem similar, add unique features, stories, or specific FAQs relevant to each location. This approach signals to Google that you’re not just creating pages for the sake of it, but providing real value to your users.

Is there an acceptable amount of duplicate content?

The reality is that SEO managers and specialists juggle many tasks and don’t have all the time in the world to fix all duplicate content issues.

This begs the question: how much duplicate content is acceptable?

Well, Google has never set down a specific number or threshold for what’s considered acceptable.

But this is what we recommend:

For those who are short on time, focus on assessing and monitoring your top-performing pages and those pages targeting high-intent keywords (such as your lead magnets).

Remember that the context of duplicate content is also important. So, don’t bother looking into duplicate content in footers, headers, or navigation elements. They’re expected to appear on multiple pages, which aren’t problematic at all.

Lastly, if you’re still unsure whether to address duplicate content, ask yourself if each page still provides the best experience for readers and contributes to a positive user experience.

If the answer is yes, you’re on the right track.

Need a pro to fix duplicate content?

Fixing duplicate content issues sooner than later can be your ticket to moving up to Google’s first page.

With a dedicated SEO agency, you won’t have to spend countless hours sorting through duplicates. We’ll handle the hard work so you can focus on running your business!

At KBA Web SEO Sydney Agency, we have duplicate content in our heads when planning your business’s SEO strategy. We take care of it early so we can focus on better strategies that move your site forward.

Book a call today!